How can a recursive download be forced to continue, despite the storage exceptions that odrive may encounter, so that I can sync everything I want to download?

For large bulk downloads, if odrive encounters numerous exceptions, rate limits, or certain types of “critical” errors, it will abort the action instead of retrying indefinitely. This can be frustrating if you want to download a large amount of content but the storage service is returning frequent errors.

For scenarios like these, it is possible to use the CLI to essentially perform a brute-force “download until done” action. It is not elegant, but it can be useful for some folks.

One of the reasons odrive stops syncing in these cases is that some integrations can “react” to what they consider aggressive behavior, such as continually trying to download files that they cannot or will not provide at that time, or continuing to make requests while they are having service issues or after an API throttle has been imposed. They can then do things like block or severely throttle your account. Keep this in mind if you wish to proceed with this method.

To use the CLI commands on MacOS:

-

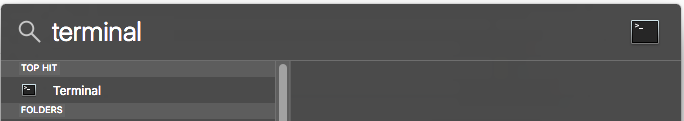

Open a terminal session (type “terminal” in Spotlight search):

-

Run the following command in the terminal session (copy & paste + Enter):

exec 6>&1;num_procs=3;output="go"; while [ "$output" ]; do output=$(find "[path to folder here]" -name "*.cloud*" -print0 | xargs -0 -n 1 -P $num_procs python $(ls -d "$HOME/.odrive/bin/"*/ | tail -1)odrive.py sync | tee /dev/fd/6); done

For the above command, be sure you replace “[path to folder here]” with the path of the folder you want to start the download from.

It is an ugly one-liner, but the above command will download everything in the folder using 3 concurrent workers. It won’t stop until everything has been downloaded.
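For anyone who wants to see what the one-liner is doing, here is a minimal sketch of the same loop spread over several lines. The function name `sync_until_done` and its parameters are made up for illustration and are not part of the odrive CLI; the sync command is passed in as arguments so the sketch does not assume an install path. File descriptor 6 is a copy of the terminal's stdout, so `tee` can show progress live while `$(...)` captures the same text to decide whether another pass is needed.

```shell
#!/usr/bin/env bash
# Readable sketch of the one-liner above (illustrative, not an official script).
# Usage: sync_until_done FOLDER WORKERS SYNC_COMMAND...

sync_until_done() {
    local folder="$1" workers="$2"
    shift 2                      # the remaining arguments are the sync command
    local output="go"            # any non-empty value, so the loop runs once

    # Keep making passes for as long as the previous pass printed something.
    # A pass that prints nothing on stdout ends the loop.
    while [ -n "$output" ]; do
        # find lists placeholder files (*.cloud, *.cloudf, ...), xargs runs
        # the sync command once per file with up to $workers in parallel,
        # and tee copies the output to fd 6 while $(...) captures it.
        output=$(find "$folder" -name "*.cloud*" -print0 |
                 xargs -0 -n 1 -P "$workers" "$@" |
                 tee /dev/fd/6)
    done 6>&1                    # fd 6 duplicates stdout for the whole loop
}
```

With the macOS paths from the command above, it could be called as `sync_until_done "[path to folder here]" 3 python "$(ls -d "$HOME/.odrive/bin/"*/ | tail -1)odrive.py" sync`. Repeated passes are needed because syncing a folder placeholder exposes new placeholder files inside it.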

To use the CLI commands on Linux:

In the terminal use the following command. Be sure you replace “[path to folder here]” with the path of the folder you want to start the download from:

exec 6>&1;num_procs=3;output="go"; while [ "$output" ]; do output=$(find "[path to folder here]" -name "*.cloud*" -print0 | xargs -0 -n 1 -P $num_procs "$HOME/.odrive-agent/bin/odrive" sync | tee /dev/fd/6); done
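Given the earlier caution about storage services reacting to aggressive request patterns, a gentler variant may be worth considering. The following is a minimal sketch and not an odrive feature: it pauses between passes and gives up after a fixed number of them. The names `placeholders_left` and `sync_with_pauses`, and the `max_passes` and `pause` parameters, are assumptions introduced for illustration.

```shell
#!/usr/bin/env bash
# Sketch: bounded, paced version of the "download until done" loop.
# Usage: sync_with_pauses FOLDER WORKERS MAX_PASSES PAUSE_SECONDS SYNC_COMMAND...

# Succeeds if at least one placeholder file still exists under the folder.
placeholders_left() {
    [ -n "$(find "$1" -name "*.cloud*" -print | head -n 1)" ]
}

sync_with_pauses() {
    local folder="$1" workers="$2" max_passes="$3" pause="$4"
    shift 4                      # the remaining arguments are the sync command
    local pass=0

    while placeholders_left "$folder"; do
        if [ "$pass" -ge "$max_passes" ]; then
            echo "giving up after $pass passes; placeholders remain" >&2
            return 1
        fi
        pass=$((pass + 1))
        echo "pass $pass of $max_passes"
        # One pass: sync every placeholder currently visible.
        find "$folder" -name "*.cloud*" -print0 |
            xargs -0 -n 1 -P "$workers" "$@"
        # Wait before the next pass to avoid hammering the storage service.
        sleep "$pause"
    done
    return 0
}
```

For example, `sync_with_pauses "[path to folder here]" 3 20 60 "$HOME/.odrive-agent/bin/odrive" sync` would allow up to 20 passes with 3 workers and a 60-second pause after each pass. The function returns non-zero if placeholder files are still left when it stops.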

To use the CLI commands on Windows:

Open up a command prompt by clicking on the Windows icon in the taskbar, typing “cmd”, and then clicking on “Command Prompt”.

Once the command prompt is open, copy and paste the following command in (all one line) and hit enter. This will install the CLI for us to use in the next command:

powershell -command "& {$comm_bin=\"$HOME\.odrive\common\bin\";$o_cli_bin=\"$comm_bin\odrive.exe\";(New-Object System.Net.WebClient).DownloadFile(\"https://dl.odrive.com/odrivecli-win\", \"$comm_bin\oc.zip\");$shl=new-object -com shell.application; $shl.namespace(\"$comm_bin\").copyhere($shl.namespace(\"$comm_bin\oc.zip\").items(),0x10);del \"$comm_bin\oc.zip\";}"

Once the CLI is finished installing (it could take a minute or two), copy and paste this next command to perform a recursive sync of a folder. This command will download one file at a time until everything is downloaded. This is another long one-liner, so make sure you copy the whole thing:

powershell -command "& {$syncpath=\"[path to folder here]\";$syncbin=\"$HOME\.odrive\common\bin\odrive.exe\";while ((Get-ChildItem $syncpath -Filter \"*.cloud*\" -Recurse | Measure-Object).Count){Get-ChildItem -Path \"$syncpath\" -Filter \"*.cloud*\" -Recurse | % { & \"$syncbin\" \"sync\" \"$($_.FullName)\";}}}"

For the above command, be sure you replace “[path to folder here]” with the path of the folder you want to start the download from.

You can run multiple instances of this script in parallel by opening additional cmd prompts and pasting the command into each one. You may see some messages about files no longer being available. This just means another script instance got to the file first, and it's not a problem. You can essentially “ramp up” or “ramp down” the download speed in this manner, at least to a certain extent.

Tony, could you please parse out that MacOS (Linux) script? In particular, I don’t understand the use of fd 6 or why you’re looking for output. I get that find will generate the list of *.cloud* files and xargs will run sync in the CLI in 3 parallel processes and put the output into $output. Is the point of running the same command multiple times that each sync will bring in new subdirectories? When exactly will the CLI generate no output? Also, if you didn’t need the parallelism, could you just run the python…sync part in a “find -exec … {}…”? Thanks.

Hi @allen,

This post goes through the command in more detail and through several iterations. It should provide the details you are looking for:

Running parallel processes will allow multiple concurrent downloads.
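On the question about skipping the parallelism: a serial version can be sketched with `find -exec`. Treat this as an illustration rather than a tested odrive recipe. The name `sync_serial` is made up, and the sync command is passed in as arguments. Unlike the one-liners above, this sketch decides whether to continue by checking for remaining placeholder files instead of looking at the CLI output, so it would keep looping on a file that never syncs.

```shell
#!/usr/bin/env bash
# Sketch: serial "download until done" using find -exec (no xargs, no -P).
# Usage: sync_serial FOLDER SYNC_COMMAND...

sync_serial() {
    local folder="$1"
    shift                        # the remaining arguments are the sync command

    # Repeat while any placeholder remains. Each pass can reveal new
    # placeholders inside folders that were just expanded.
    while [ -n "$(find "$folder" -name "*.cloud*" -print | head -n 1)" ]; do
        # Run the sync command once per placeholder, one at a time.
        find "$folder" -name "*.cloud*" -exec "$@" {} \;
    done
}
```

On Linux this could be called as `sync_serial "[path to folder here]" "$HOME/.odrive-agent/bin/odrive" sync`.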